OpenAI has been in hot water over data privacy ever since ChatGPT was first released to the public. The company used vast amounts of data from the public internet to train the large language model powering ChatGPT and other AI products, and that data appears to have included copyrighted content. Some creators have sued OpenAI, and several governments have opened investigations.

Regular users lacked basic privacy protections, too, such as the ability to opt out of having their data used to train the AI. It took pressure from regulators for OpenAI to add privacy settings that let you keep your content from being used to train ChatGPT.

Going forward, OpenAI plans to deploy a new tool called Media Manager that will let creators opt their work out of training for ChatGPT and the other models that power OpenAI products. The tool is arriving much later than some people expected, but it’s still a useful privacy upgrade.

OpenAI published a blog post on Tuesday detailing the new privacy tool and explaining how it trains ChatGPT and other AI products. Media Manager will let creators identify their content and tell OpenAI they want it excluded from machine learning research and training.

Now, the bad news: the tool isn’t available yet. OpenAI says it will be ready by 2025 and that it plans to introduce additional choices and features as development continues. The company also hopes the tool will set a new industry standard.

Sora is OpenAI’s AI-based text-to-video generator. Image source: OpenAI

OpenAI did not explain in detail how Media Manager will work. But it has big ambitions for the tool, which will cover all sorts of content, not just the text that ChatGPT might encounter on the internet:

This will require cutting-edge machine learning research to build a first-ever tool of its kind to help us identify copyrighted text, images, audio, and video across multiple sources and reflect creator preferences.

OpenAI also noted that it’s working with creators, content owners, and regulators to develop the Media Manager tool.

How OpenAI trains ChatGPT and other models

The new blog post wasn’t just an announcement of Media Manager, a tool that might keep copyrighted content out of ChatGPT and other AI products. It also reads as a declaration of the company’s good intentions about developing AI products that benefit users, and as a public defense against claims that ChatGPT and other OpenAI products might have used copyrighted content without authorization.

OpenAI explains how it trains its models and the steps it takes to keep unauthorized content and user data out of ChatGPT.

The company also says it doesn’t retain any of the data it uses to teach its models. The models do not store data like a database. Also, each new generation of foundation models gets a new dataset for training.

After the training process is complete, the AI model does not retain access to data analyzed in training. ChatGPT is like a teacher who has learned from lots of prior study and can explain things because she has learned the relationships between concepts, but doesn’t store the materials in her head.

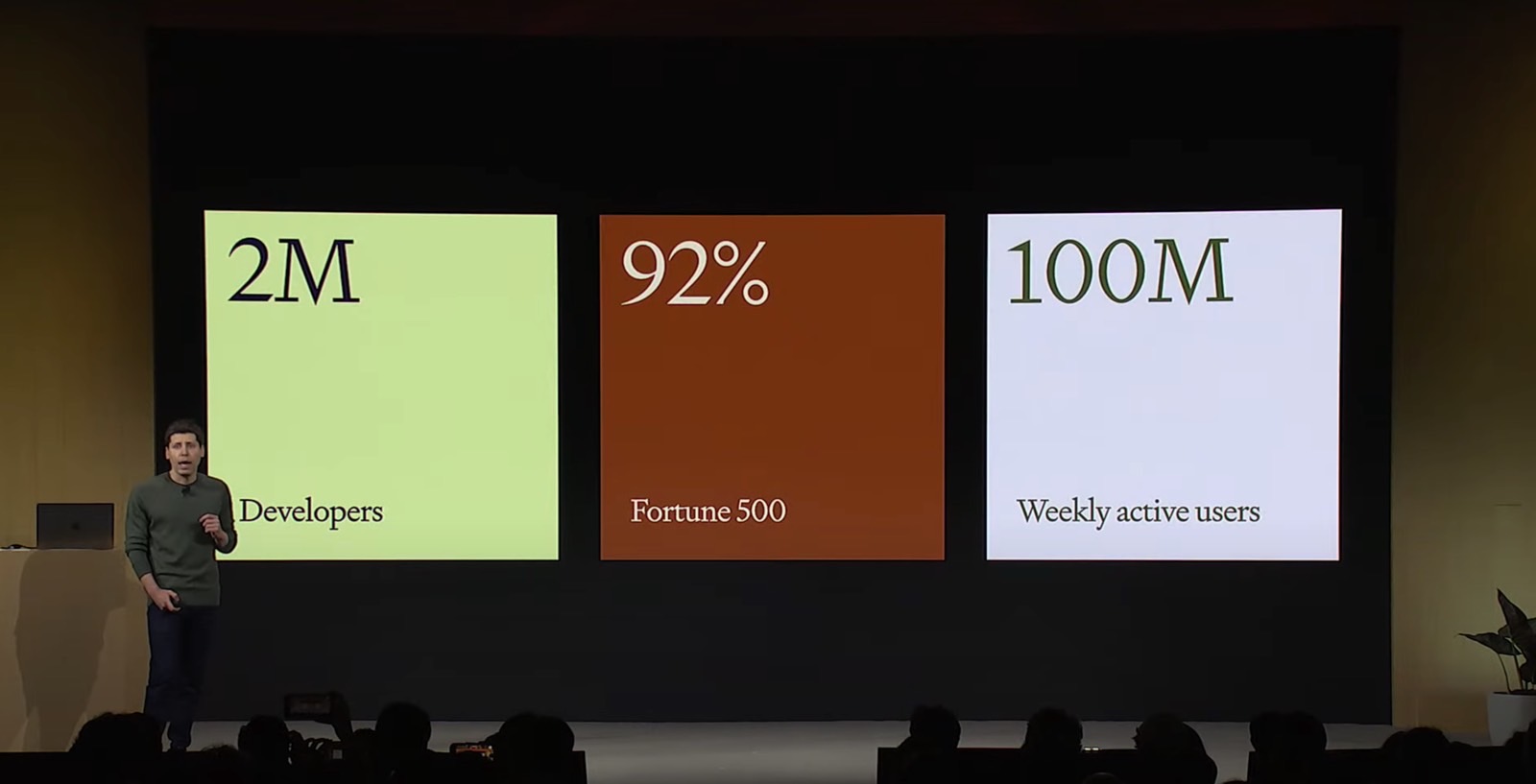

OpenAI DevDay keynote: ChatGPT usage in 2023. Image source: YouTube

Furthermore, OpenAI says that ChatGPT and other models should not regurgitate content. When they do, it is a failure of the training process.

If on rare occasions a model inadvertently repeats expressive content, it is a failure of the machine learning process. This failure is more likely to occur with content that appears frequently in training datasets, such as content that appears on many different public websites due to being frequently quoted. We employ state-of-the-art techniques throughout training and at output, for our API or ChatGPT, to prevent repetition, and we’re continually making improvements with on-going research and development.

The company also wants diverse training data for ChatGPT and other AI models, meaning content in many languages that covers various cultures, subjects, and industries.

“Unlike larger companies in the AI field, we do not have a large corpus of data collected over decades. We primarily rely on publicly available information to teach our models how to be helpful,” OpenAI adds.

The company uses data “mostly collected from industry-standard machine learning datasets and web crawls, similar to search engines.” It excludes sources with paywalls, those that aggregate personally identifiable information, and content that violates its policies.

OpenAI also uses data partnerships for content that’s not publicly available, like archives and metadata:

Our partners range from a major private video library for images and videos to train Sora to the Government of Iceland to help preserve their native languages. We don’t pursue paid partnerships for purely publicly available information.

The Sora mention is interesting, as OpenAI recently came under fire for not being able to fully explain how it trained the AI models behind its sophisticated text-to-video product.

Finally, human feedback also plays a part in training ChatGPT.

Regular ChatGPT users can also protect their data

OpenAI also reminds ChatGPT users that they can opt out of having their data used to train the chatbot. These privacy features already exist and predate the Media Manager tool, which is still in development. According to OpenAI, “Data from ChatGPT Team, ChatGPT Enterprise, or our API Platform” is not used to train ChatGPT.

Similarly, ChatGPT Free and Plus users can opt out of having their data used to train the AI.