If you woke up this morning to all sorts of reports talking about putting glue on pizza so the cheese sticks better, it’s because that’s what Google Search will tell you to do. It’s all because of the Gemini-powered AI Overviews that appear at the top of the results.

Putting glue on pizza or any kind of food isn’t a good idea. I don’t need Google Search to tell me that. But what if some people take it seriously because it appears at the top of a Google Search? What if someone actually puts glue on pizza or other food?

I hope that never happens, of course. I’m just pointing out the obvious flaw of Google’s new AI Overviews that some people might still not be aware of. AI products like ChatGPT and Gemini still hallucinate. That’s something you should be aware of whenever you talk to AI. It’s not a problem as long as you know it happens, and how to check if the information you got is factual.

We’ve all known about AI hallucinations for a while now, so it’s not exactly a big deal in most cases. What is a massive issue, however, is when hallucinations take over one of the most trusted places on the internet: The top of a Google Search.

When ChatGPT debuted, reports of panic at Google HQ spread like wildfire. Google had to refocus on adding AI to everything, including Google Search. The company’s flagship product was threatened by ChatGPT.

I did replace Google Search, but that had nothing to do with ChatGPT, even though OpenAI’s chatbot is part of my current internet search experience. I use ChatGPT, Perplexity, and DuckDuckGo instead of Google Search. I purposely replaced Google long before the era of AI, and I would have done so even without genAI products becoming widely available. To put it bluntly, Google’s search results simply aren’t very good anymore, and they haven’t been for a long time.

ChatGPT and Perplexity came on the scene and made my search experience even better. And they always give me links so I can verify information.

Back in the early days of ChatGPT, reports also said that Google was very careful about adding AI to Search. The company worried about ruining the reputation of Google Search if the AI hallucinated. Remember that Bard (the precursor of Gemini) did just that in one of the first demos Google offered. Google stock tanked immediately as a result.

Google then released the Search Generative Experience (SGE) as the AI-powered alternative to Google Search. That seemed like a safer way to navigate the early days of the AI era. Google could test AI search results with a small audience, while Google Search was left untouched.

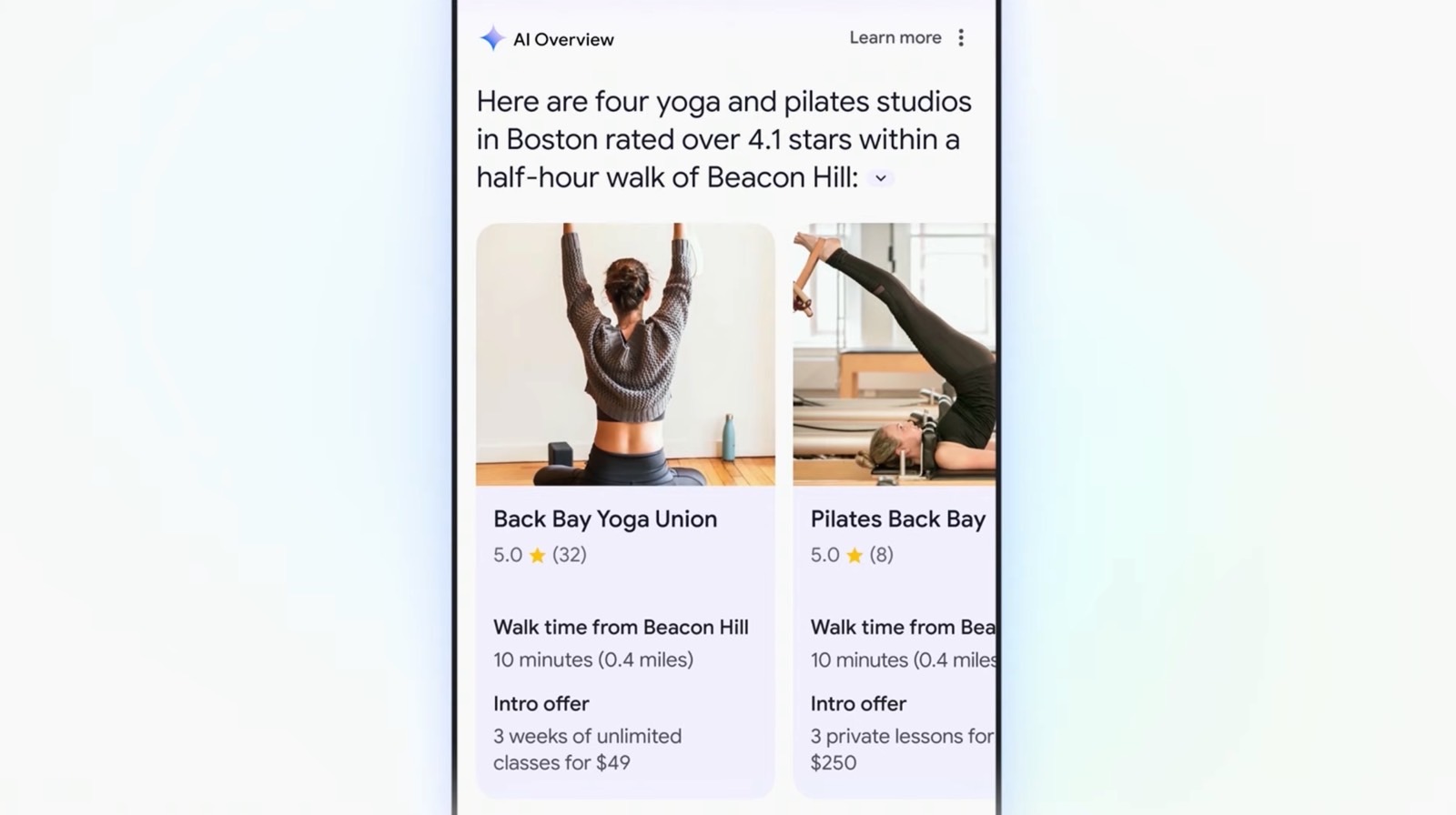

AI Overviews in Google Search aren’t for everyone. Image source: Google Inc.

A year later, Google felt so confident in Gemini that it moved AI Overviews to the top of Google Search results. You can’t escape them, as that’s the new default, at least in the US. I haven’t seen AI Overviews in Europe, but they’ll be here eventually. Also, there are ways to get around AI Overviews in the US that don’t require you to ditch Google Search.

That said, Google Search hasn’t been my main search engine for a long time, and I’m not going back.

As for Google AI’s suggestion to put glue on pizza for cheese to stick better, the response comes from an 11-year-old joke on Reddit. A user complained about cheese falling off their pizza, and a Redditor by the name of Fucksmith gave them the following response:

To get the cheese to stick I recommend mixing about 1/8 cup of Elmer’s glue in with the sauce. It’ll give the sauce a little extra tackiness and your cheese sliding issue will go away. It’ll also add a little unique flavor. I like Elmer’s school glue, but any glue will work as long as it’s non-toxic.

Following Google’s $60 million-per-year deal to include info from Reddit in its search results, Google Search now suggests adding glue to your sauce to prevent cheese from sliding off your pizza. AI Overviews seen by Gizmodo and others replicate the advice the Redditor gave.

This isn’t the only instance of Gemini AI giving out wrong information. Gizmodo offers the example of a search asking which US presidents went to the University of Wisconsin-Madison. The AI Overview answers that 13 presidents attended the school, most of them long after they died. No US president came from Wisconsin or went to the university.

Google told Gizmodo the hallucinations mainly happen with uncommon questions:

The examples we’ve seen are generally very uncommon queries, and aren’t representative of most people’s experiences. The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web. We conducted extensive testing before launching this new experience and will use these isolated examples as we continue to refine our systems overall.

But an X user posted a Google Search for “I’m feeling depressed,” where AI Overview delivered a solution that will leave you dumbfounded:

There are many things you can try to deal with your depression. One Reddit user suggests jumping off the Golden Gate Bridge.

An example of Google Search AI Overviews going terribly wrong. Image source: X.com

Reddit’s dark sense of humor strikes again.

I’d venture a guess and say that “I’m feeling depressed” qualifies as a common question. A very serious one.

Maybe Google should get back to conducting extensive testing before re-launching the AI Overviews feature and let Google Search users do the googling themselves the old-fashioned way. Then again, you could just ditch Google Search and forget all about putting glue on your pizza.